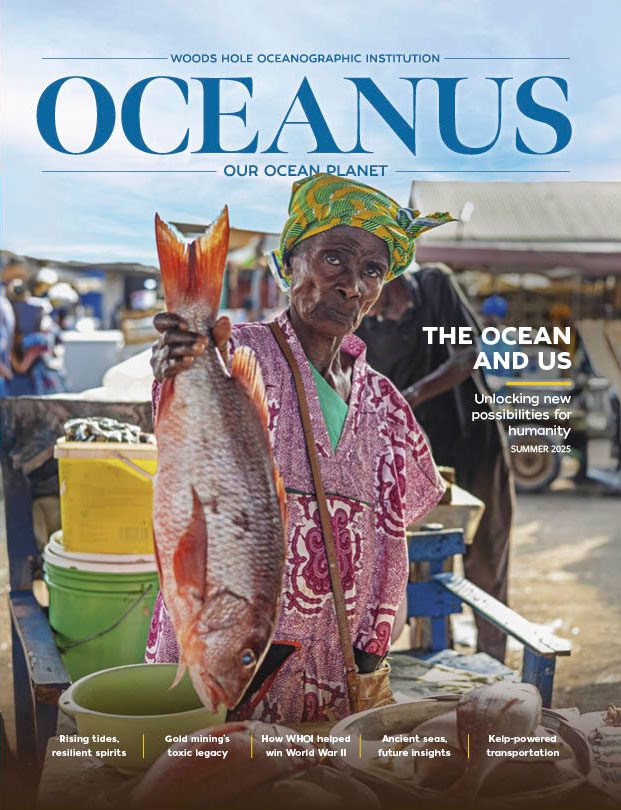

This article printed in Oceanus Summer 2023

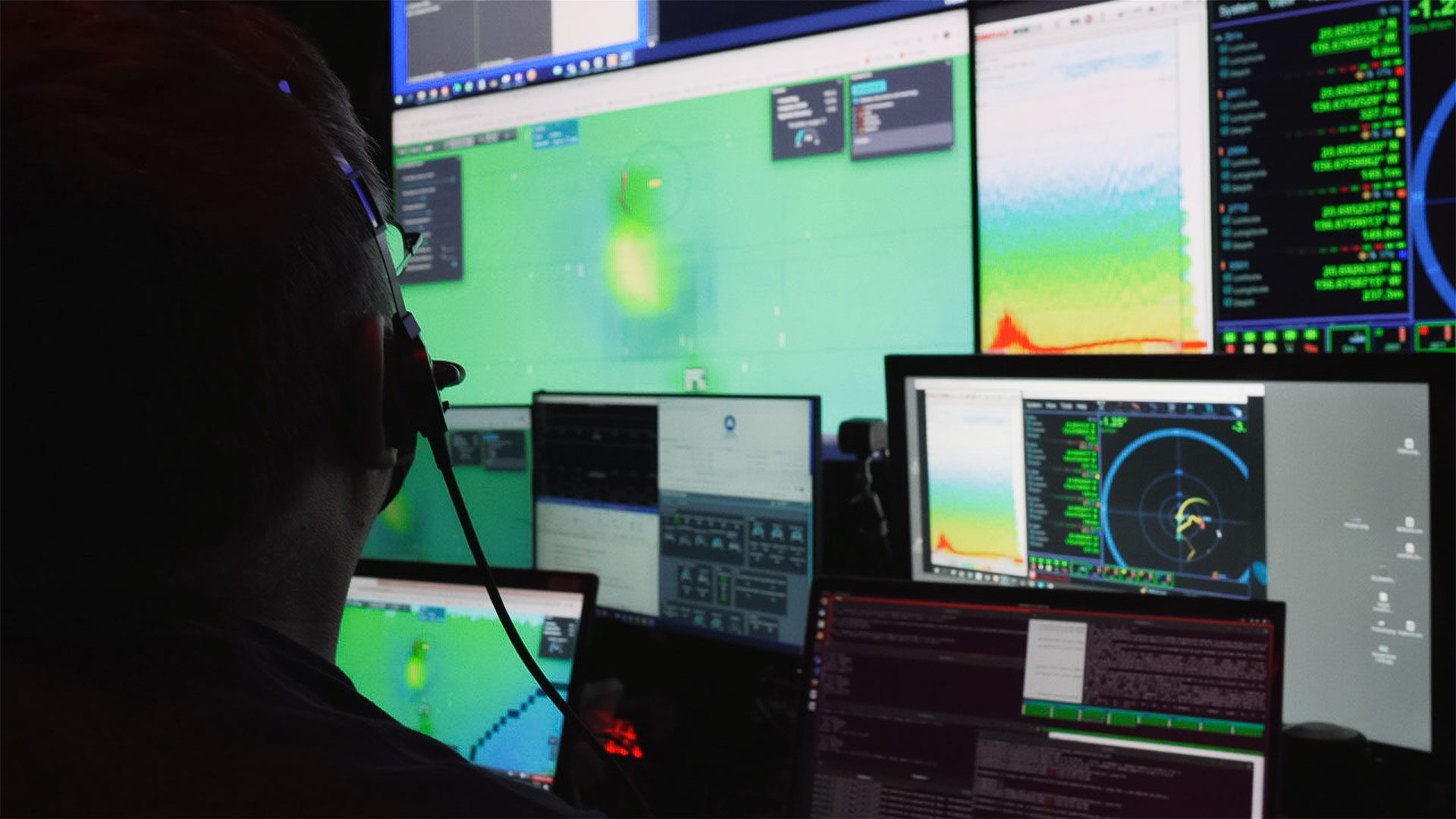

From the banks of control room monitors on the E/V Nautilus, scientists spotted the 75-meter-high seamount jutting out of the seafloor like a spire. It came into view after DriX, a fire-engine-red surface robot operated by the University of New Hampshire (UNH)’s Center for Coastal and Ocean Mapping, echolocated it with high-resolution sonar.

The seamount was worth a closer look, the scientists thought, particularly since there was also a dense layer of marine life swimming nearby. The team sent commands to DriX, which positioned itself above the pinnacle and began circling the sea surface like a frenzied shark. Then, as if straight out of a sci-fi film, it began delegating tasks of its own to a pair of underwater robots below.

University of New Hampshire’s DriX surface robot runs high-resolution sonar through the water column while circling the sea surface like a shark. (Photo by Marley Parker, Ocean Exploration Trust)

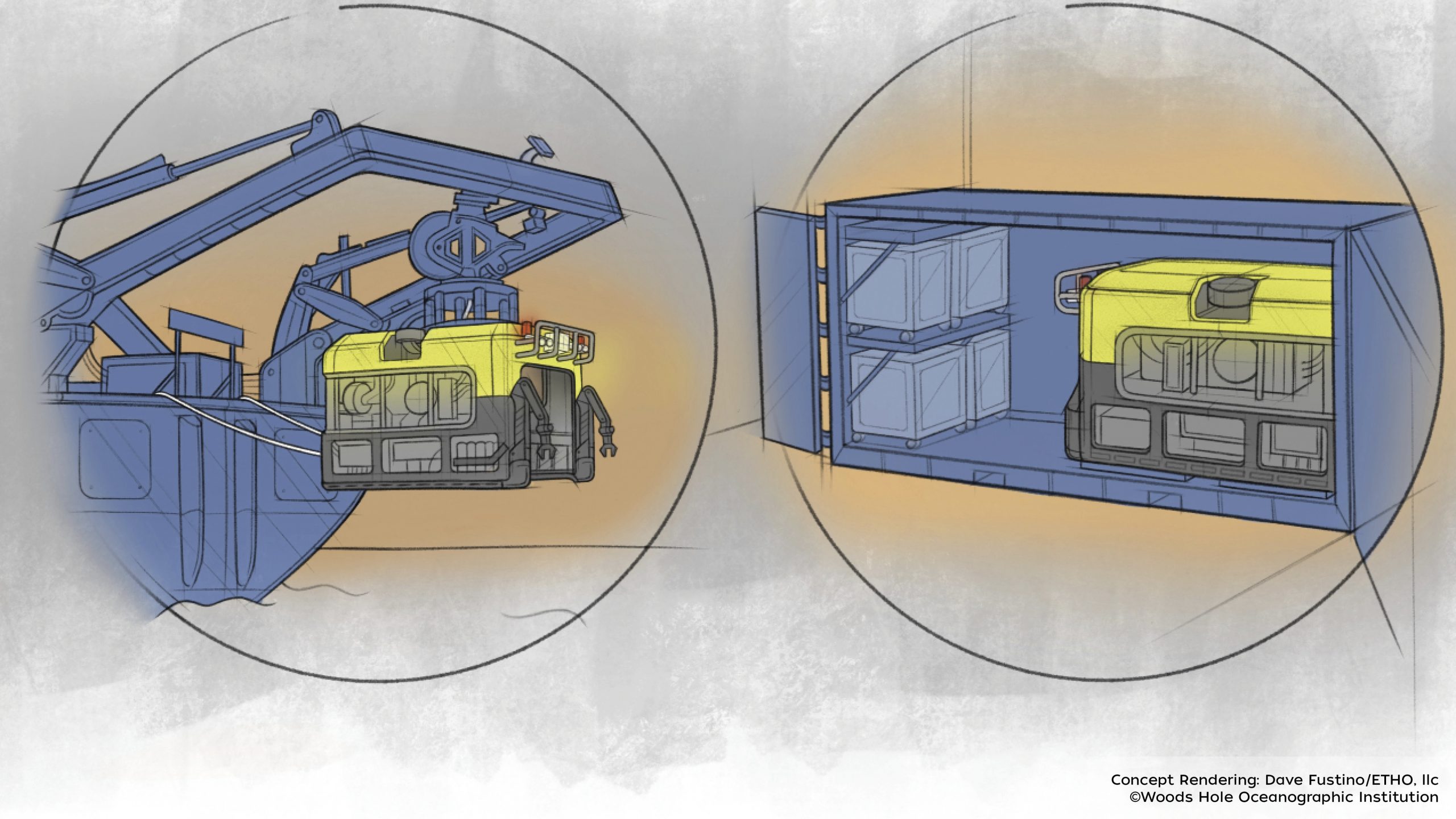

DriX sent its first set of commands to Mesobot, a yellow-and-black ocean robot developed collaboratively by WHOI, the Monterey Bay Aquarium Research Institute, Stanford University, and the University of Texas Rio Grande Valley. The robot investigates life in the ocean’s dimly lit twilight zone and uses a combination of advanced camera and lighting technologies to slowly (and quietly) follow individual animals through the water column.

Once Mesobot, a few hundred meters below, received the text-like commands from DriX, it propelled itself over to the seamount. There, it began sampling seawater to collect environmental DNA (eDNA, or “genetic fingerprints” from marine life) and capturing video of animals as they swam through the ocean’s midwater.

Then, to get a picture of what was happening down on the seafloor, DriX began dishing out a different set of text commands to a second WHOI robot, Nereid Under Ice (NUI). NUI is a hybrid robot, meaning it can operate either as a remotely operated vehicle (ROV), controlled from a ship via a hair-thin fiber-optic tether, or as an autonomous underwater vehicle (AUV) with no human piloting it.

NUI began its investigation of the site in ROV mode, capturing real-time video and samples while the Nautilus stood off in the distance. As the science team watched the footage from the ship, they saw a patch of water a few meters above the seafloor begin to shimmer: a freshwater plume had escaped from below the seafloor and was gushing straight up into the water column. NUI then ascended the steep inclines of the seamount, capturing additional video as it passed fish, eels, tunnels, and cliff faces along the way.

Shortly after, NUI transitioned to AUV mode, taking all of its commands from DriX circling above. This allowed the science team to prompt NUI for images, bathymetric data, and other measurement data it was collecting.

Suddenly, those aboard the Nautilus realized what they were witnessing: three ocean robots were working together as a team to explore different dynamics of the ocean as the freshwater plume event was unfolding—all at the same time.

“Multi-vehicle collaboration represents a whole new paradigm for ocean robots and at-sea operations,” said WHOI research engineer Robin Littlefield, one of the WHOI roboticists aboard the Nautilus for two weeks of ocean technology integration testing off Oahu, Hawaii, in May 2022.

The expedition was aimed at answering a central question: What’s possible when a group of robots starts exploring and working on science questions together?

During this particular dive, the science team came away with a snapshot of how an event such as a freshwater plume can affect water density and animal behavior in different layers of the ocean. More broadly, the robotic teamwork let the team explore more of the ocean in less time.

“Autonomy is important as it takes pressure off human operators,” Littlefield said. “If robots can be communicating and working together, people will no longer have to commit all of their time to overseeing missions and can be freed up to add value in other ways.”

Ships can also be freed up, according to Eric Hayden, an engineer on the Mesobot team.

“When you have a ship that costs tens of thousands of dollars a day having to stay within the range of a robot like Mesobot, it has to babysit that operation and that limits the other things a ship can do, like deploying other vehicles and instruments at sea,” Hayden said.

During the freshwater plume investigation, the science team could not only track Mesobot’s location on the ship’s monitors but also view the scattered clouds of biomass surrounding it.

“This allowed us to do this super-targeted sampling of the twilight zone fish and zooplankton we were interested in looking at,” said Hayden.

Annette Govindarajan, a molecular ecologist at WHOI and the eDNA lead, was working remotely from her lab in Woods Hole at the time and could see everything in real time on her own monitor. “It allowed us to really know what we were sampling instead of just sampling blindly and hoping you get what you’re interested in,” she said.

The ability to direct Mesobot in real time based on its location, Govindarajan said, is important because animals aren’t distributed evenly throughout the twilight zone.

“Our goal was to sample above, within, and below the patches of biomass we were seeing, so we can use eDNA techniques to later figure out what specific animals make up those layers,” she said.

Getting three robots to “text” below the surface was among the biggest tech challenges the team faced during the expedition. Wi-Fi and radio communications don’t work underwater, so they had to rely on underwater acoustic technology.

Val Schmidt, who leads the marine robotics group at the University of New Hampshire, said the size of the text-like commands was critical. “We needed to make the messages extremely small so they could get from the acoustic modem of one robot to the other,” he said. “We compressed them down to the bare minimum of any command that needed to be transferred.”

And because Mesobot “speaks its own language,” Schmidt said, the team had to develop a library for DriX so it could issue commands that Mesobot understood.

The testing off Oahu, which involved more than 30 dives over hundreds of hours, proved the multi-robot integration concept.

“Investigating the surface, midwater, or seafloor alone is like reading a random chapter in a storybook: it is likely interesting, but you don’t know the whole plot,” said Molly Curran, a mechanical engineer at WHOI and expedition lead for the NUI team. “When we have this big picture view of all three together, we begin to create a real story of the ocean with so much more depth and insight.”

Now, the team plans to add more autonomy into the mix when they head back to Oahu in Fall 2023.

“Humans were very much in the loop on this demonstration,” said Littlefield, “but in the future, we hope to use artificial intelligence to inform onboard decision-making so the robots can decide what’s important and explore on their own. That will open the door for more robots in the ocean at one time, and for them to have a persistent presence there collecting data and informing us on the condition of our oceans.”

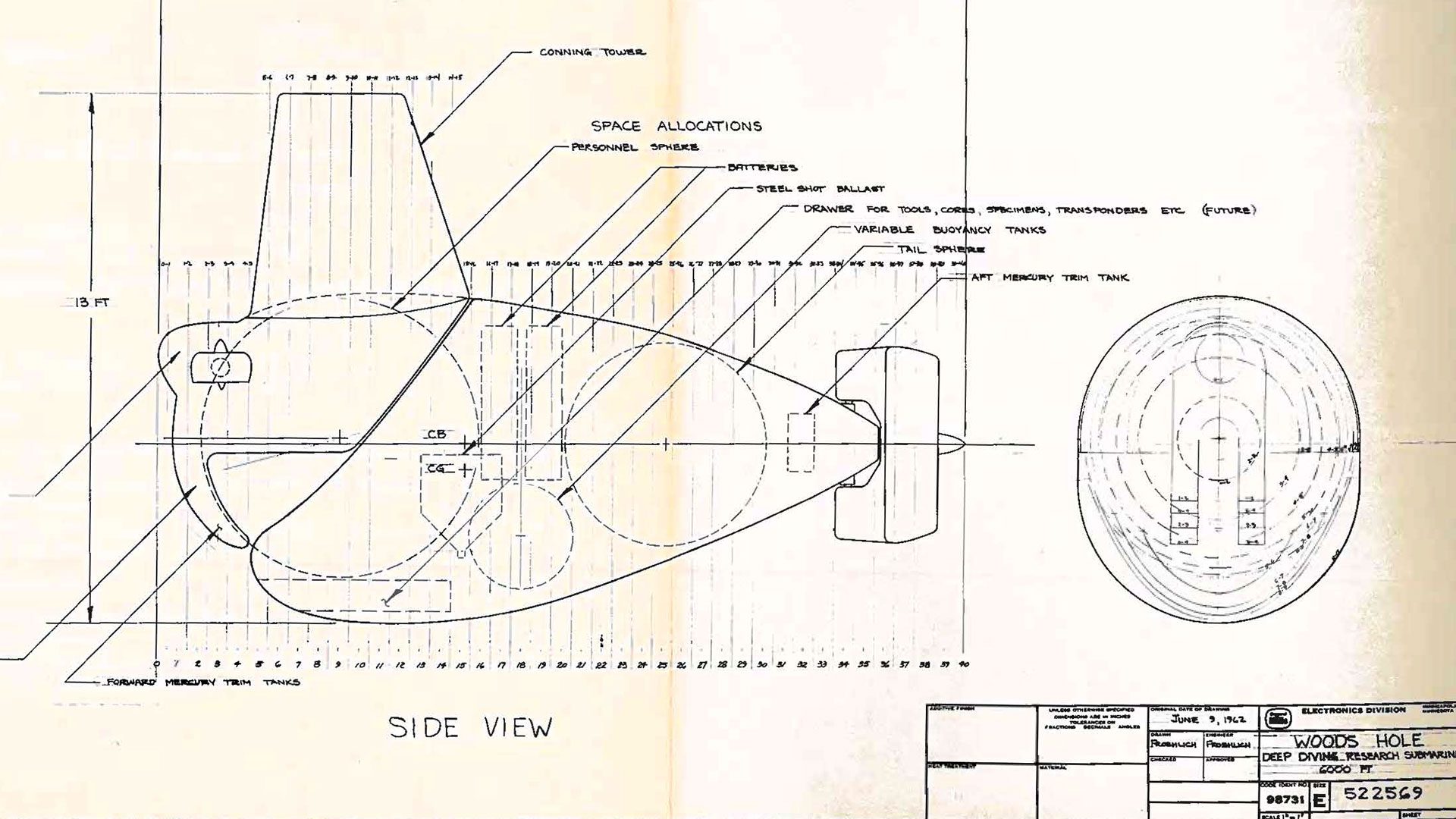

This expedition, led by Jason Fahy from the University of Rhode Island’s Graduate School of Oceanography, was a partnership between Woods Hole Oceanographic Institution (WHOI), the Ocean Exploration Cooperative Institute (OECI), the University of Rhode Island, the Ocean Exploration Trust (OET), the University of Southern Mississippi, the University of New Hampshire, and primary funding partner National Oceanic and Atmospheric Administration (NOAA) Ocean Exploration. Lead scientists included WHOI senior scientist Dana Yoerger, WHOI principal engineer Andy Bowen, WHOI research specialist Annette Govindarajan, and Larry Mayer, professor and director of UNH’s Center for Coastal and Ocean Mapping.