
Ecologists face the challenge of how to study animals that live in remote environments. Accessing them is one issue. But once we get to their location, how can we learn about these organisms—their behavior, habitat use, and connection to the rest of the ecosystem—without changing their behavior simply because we are present? To address this concern, ecologists are teaming up with physicists and computer scientists to generate novel ways to do science.

“Our aim is always to do more science with less impact,” says Daniel Zitterbart, head of WHOI’s Marine Animal Remote Sensing Laboratory. His lab uses a variety of sensors—and now a robot named ECHO—to study emperor penguin behavior.

Frigid winds sweep across the Antarctic landscape, buffeting emperor penguins on the outside of a huddle. The birds—all males—each care for a single egg tucked under a warm fold of belly. It’s tricky business, keeping that egg toasty in such harsh conditions, and males huddle together for warmth. But birds on the windward side feel the cold. After a while, they waddle downwind to a more protected location.

Scientists don’t understand the dynamics of huddling or the extent to which bird health influences huddling behavior. These are two questions Zitterbart’s team hopes to address. More importantly, they seek to understand the Antarctic ecosystem as a whole. Emperor penguins are ideal subjects to help answer such questions.

“Emperor penguins are at the top of the food chain,” Zitterbart says, so they reflect what’s happening at lower levels of the marine food web. “They [also] swim far from their home colony and sample the ocean for us, and bring this information back to their home colony where we can study them.” The results of the current project will help determine locations to be set aside as Marine Protected Areas, and changes in behavior on land can provide information about how these birds are adjusting to a changing climate.

Each year, the researchers tag 500 chicks. Like the microchips put in pets, the tags identify individuals, providing a record of who’s in the colony at any given time. The challenge, however, is reading the tags. The scanner must be within a meter or two of the tag to read it, and people can’t wander through the colony without disturbing the birds. The team needed something that could move among the birds, reading tags as it went.

Enter ECHO. This one-meter-tall robot, disguised as a mound of snow, can travel to, through, and around the colony. Every time it gets close to a tagged penguin, it records the information and relays it to SPOT, a nearby observation platform equipped with cameras and Wi-Fi. ECHO can be remote-controlled when needed, but it also works autonomously, moving slowly enough to avoid alarming the birds.

Placing ECHO downwind of a huddle also allows it to collect data on huddle behavior. As upwind birds shift position, the entire group slowly moves toward ECHO, eventually enveloping it. By reading radio tags throughout the process, ECHO can help paint a picture of individual movement within the larger mass.

“All the automation,” Zitterbart says, “is in support of having less humans working in the colony and ideally leaving the animals alone from our impacts.”

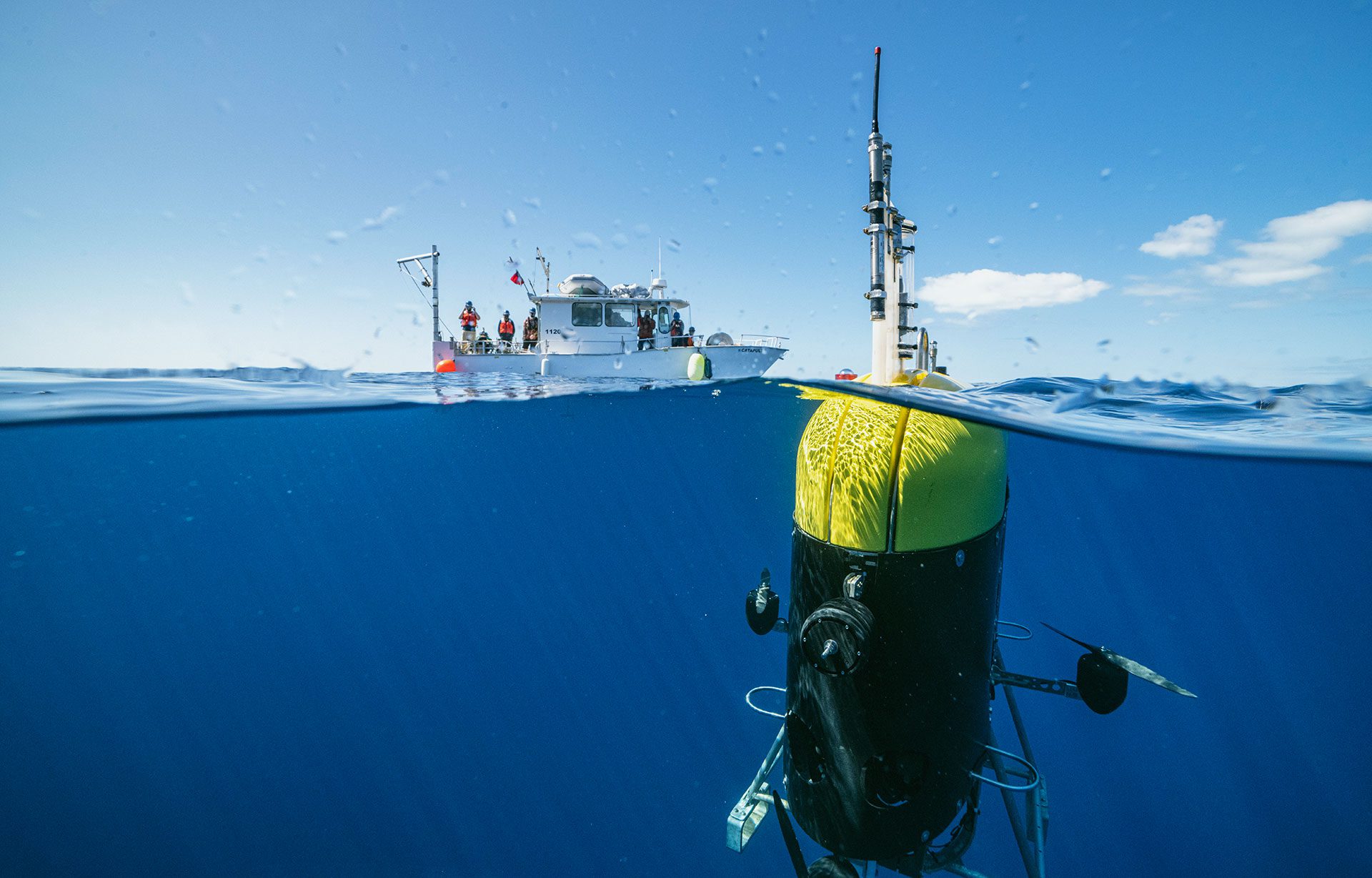

WHOI scientists and engineers test AUV Mesobot’s improved imaging and light sensors in the waters off the coast of Bermuda to prepare for future missions in the Ocean Twilight Zone. (Photo by Marine Imaging Technologies LLC, © Woods Hole Oceanographic Institution)

Journey through the Twilight Zone

The mesopelagic, or twilight zone, spans the globe at ocean depths of 200 to 1,000 meters. “For you and I, starting at 200 meters, everything’s just going to look black,” says WHOI engineer Eric Hayden. Our eyes can’t detect the tiny amounts of sunlight that manage to enter the twilight zone. One in ten photons makes it to 200 meters. None survive beyond 1,000 meters.

Despite—or perhaps because of—the lack of light, this zone is home to some of the richest marine life on the planet. The animals that dwell here are sensitive to small changes in light. As the sun sets, the world’s largest migration begins: Most of the animals in the twilight zone follow the light to the surface where they can feed under cover of night. As the sky begins to brighten near dawn, they descend again to hide in darkness.

Researchers have known for decades that this migration occurs, but studying the organisms that make the journey has been a challenge. Until now. Researchers at WHOI, University of Texas Rio Grande Valley, Monterey Bay Aquarium Research Institute, and Stanford University teamed up to create Mesobot, an autonomous robot uniquely suited to studying marine life in the twilight zone.

Mesobot is equipped with radiometers that allow it to “see the difference in light between, like, 500 meters and 600 meters,” Hayden explains. This allows Mesobot to move vertically with the light levels, journeying alongside deep-water organisms as they make their trek to the surface and back. As it goes, it can track animals of interest and take high-quality photos for researchers to study.
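The idea of following a fixed light level up and down the water column can be illustrated with a toy calculation. The sketch below is not Mesobot's control software. It assumes, purely for illustration, that light fades exponentially with depth and that one tenth of surface light remains at 200 meters, and it uses a simple proportional rule to nudge a simulated vehicle toward the depth where the measured light matches a target. All function names, the gain, and the step limit are hypothetical.

```python
import math

# Assumption: light falls off exponentially with depth, calibrated so that
# one tenth of the surface light remains at 200 m. This is a toy model.
ATTENUATION_PER_M = math.log(10) / 200.0


def light_fraction(depth_m, surface_light=1.0):
    """Light level at a given depth under the toy exponential model."""
    return surface_light * math.exp(-ATTENUATION_PER_M * depth_m)


def isolume_depth(target_light, surface_light):
    """Depth at which the toy model predicts the target light level."""
    if target_light <= 0 or surface_light <= 0:
        raise ValueError("light levels must be positive")
    depth = math.log(surface_light / target_light) / ATTENUATION_PER_M
    return max(depth, 0.0)


def step_depth(depth_m, measured_light, target_light,
               gain_m=20.0, max_step_m=5.0):
    """One control step: go deeper if too bright, shallower if too dim."""
    error = math.log(measured_light / target_light)  # > 0 means too bright
    step = max(-max_step_m, min(max_step_m, gain_m * error))
    return max(0.0, depth_m + step)


def follow_isolume(start_depth_m, target_light, surface_light, steps=200):
    """Repeat the control step under a fixed surface light level."""
    depth = start_depth_m
    for _ in range(steps):
        measured = light_fraction(depth, surface_light)
        depth = step_depth(depth, measured, target_light)
    return depth


if __name__ == "__main__":
    # Lock onto the light level found at 500 m in full daylight, then
    # simulate dusk by cutting the surface light to one tenth.
    target = light_fraction(500.0)
    print(round(follow_isolume(500.0, target, surface_light=0.1), 1))
```

With these assumed numbers, dimming the surface light to one tenth shifts the matching light level from 500 meters to about 300 meters, so the simulated vehicle rises as the sky darkens, much as the migrating animals do.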

Mesobot is outfitted with three cameras: A high-definition “science camera” is flanked by two monochrome stereo cameras that give Mesobot binocular vision, allowing it to home in on objects and keep them in sight. Biologist Annette Govindarajan’s laboratory also uses Mesobot as a platform to collect samples of environmental DNA (eDNA) from the water column to identify which species are migrating.

“Because Mesobot's movements track with a parcel of water, it provides an opportunity to study temporal biodiversity changes in that parcel,” says Govindarajan. “We’ve taken time series of eDNA samples before, during, and after the evening migration, and will analyze that data in conjunction with the other data that Mesobot collects.”

“A good way to think of eDNA is sort of like a crime scene,” Hayden says. “You’re not seeing exactly what is there. The cameras are, but [with eDNA] you’re seeing what has been there and in a lot of ways that’s more accurate, because it could be that animals moved out of the view of the cameras” and would be missed without taking samples.

In addition to revealing life forms that have otherwise remained a mystery, Mesobot’s missions will help researchers understand the role of mesopelagic animals in the carbon pump. “The mesopelagic layer is very important in getting carbon from the surface waters down,” Hayden notes. Animals move carbon from the surface during feeding, releasing it in deeper waters where it can sink to the ocean floor. “It’s important,” he says, “that we start to understand these animals that are doing a lot of work, sort of combating climate change.”

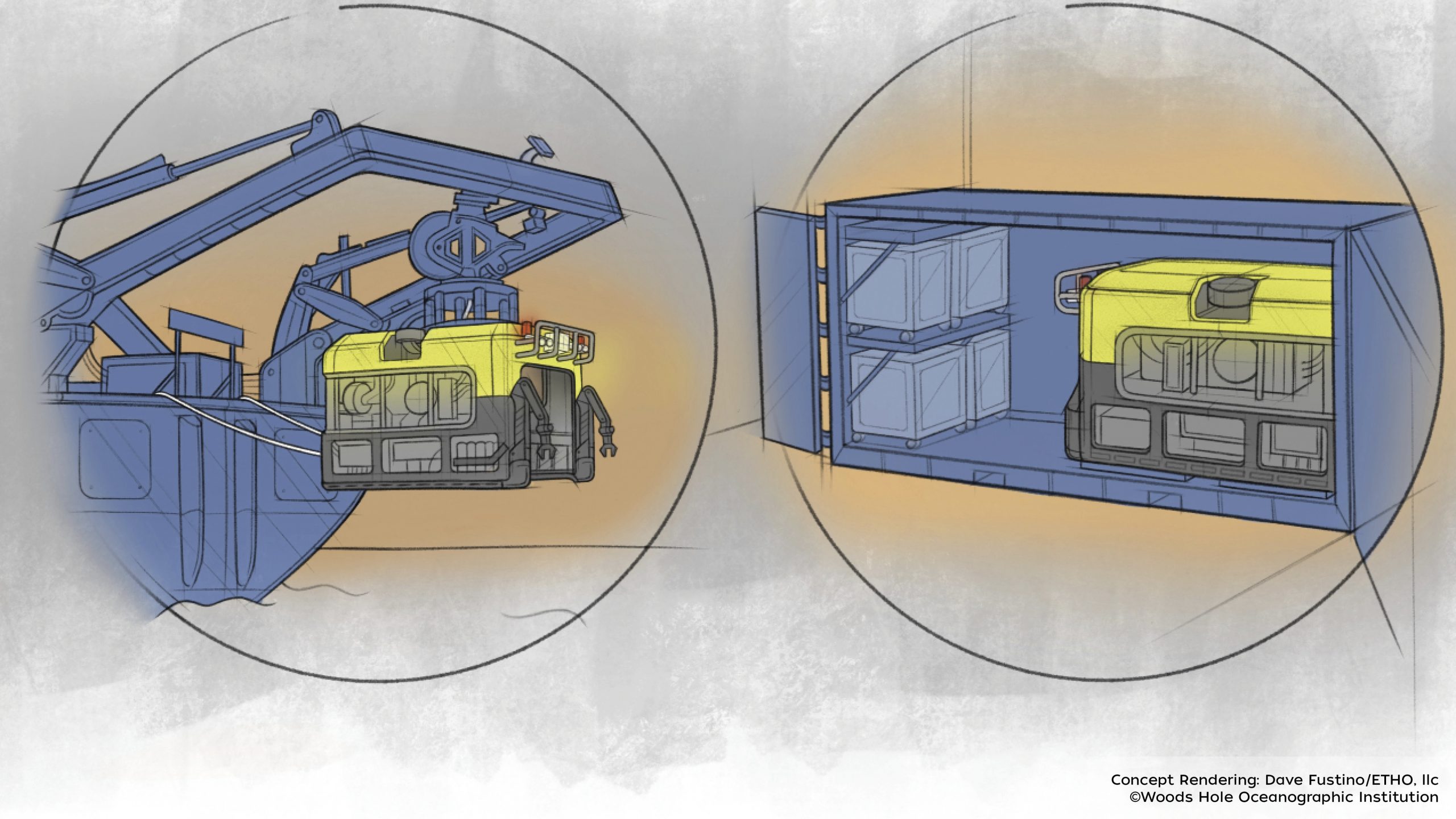

Topside operators can monitor CUREE’s position and video feed on a computer and instruct it to track apex predators like this barracuda to learn more about the reef ecosystem’s hierarchy. (Photo courtesy of the WARP LAB, © Woods Hole Oceanographic Institution)

A day in the life of a barracuda

Coral reefs aren’t always remote, but they are complex, and that makes reef research a challenge. Enter CUREE, a small robot developed to study animals in these complex environments. At the moment, CUREE is in the U.S. Virgin Islands, tracking a barracuda. The fish roams the reef and CUREE follows, keeping it in sight of its cameras and adjusting its distance and speed using six thrusters. After several minutes, the fish vanishes, and Levi “Veevee” Cai, a Ph.D. student at WHOI and MIT, selects a new animal for CUREE to track.

CUREE was designed to be visually and acoustically curious. Equipped with four hydrophones to detect sound, CUREE can turn itself in the direction of interesting noises and maneuver close enough for its cameras to pick up on the source of the sound, then follow an animal of interest to see what it does. At least, that’s the plan. CUREE is in the early stages of testing and development, but it has proven itself capable of listening to snapping shrimp and following animals ranging from jellyfish to barracuda.

CUREE still needs a bit of guidance from Cai via a tether. Once Cai spots an animal of interest, he directs the robot to track it, then lets it travel on its own, only jumping in to help if CUREE loses the focal animal. “We are slowly increasing the level of autonomy,” says Yogesh Girdhar, the WHOI computer scientist who initiated the CUREE project. “We have made good progress in the last few years.”

The idea is to eventually have robots that wander the reef in search of interesting animals, then track them for long periods of time without constant human supervision. These kinds of efforts can help scientists better understand the complicated suite of interactions that characterize a coral reef. “There are a lot of different species, lots of different habitats, and lots of interactions between them,” Girdhar says. “Computer scientists like this kind of complexity.”

Traditionally, scientists have had to tag organisms in order to track them, but the CUREE team hopes its work will do away with the need for invasive, time-intensive tagging. “I think it’s going to change how we understand these ecosystems,” Girdhar says.