AI in the Ocean Twilight Zone

Deep Learning techniques are revealing new secrets about the mesopelagic

This article printed in Oceanus Summer 2023

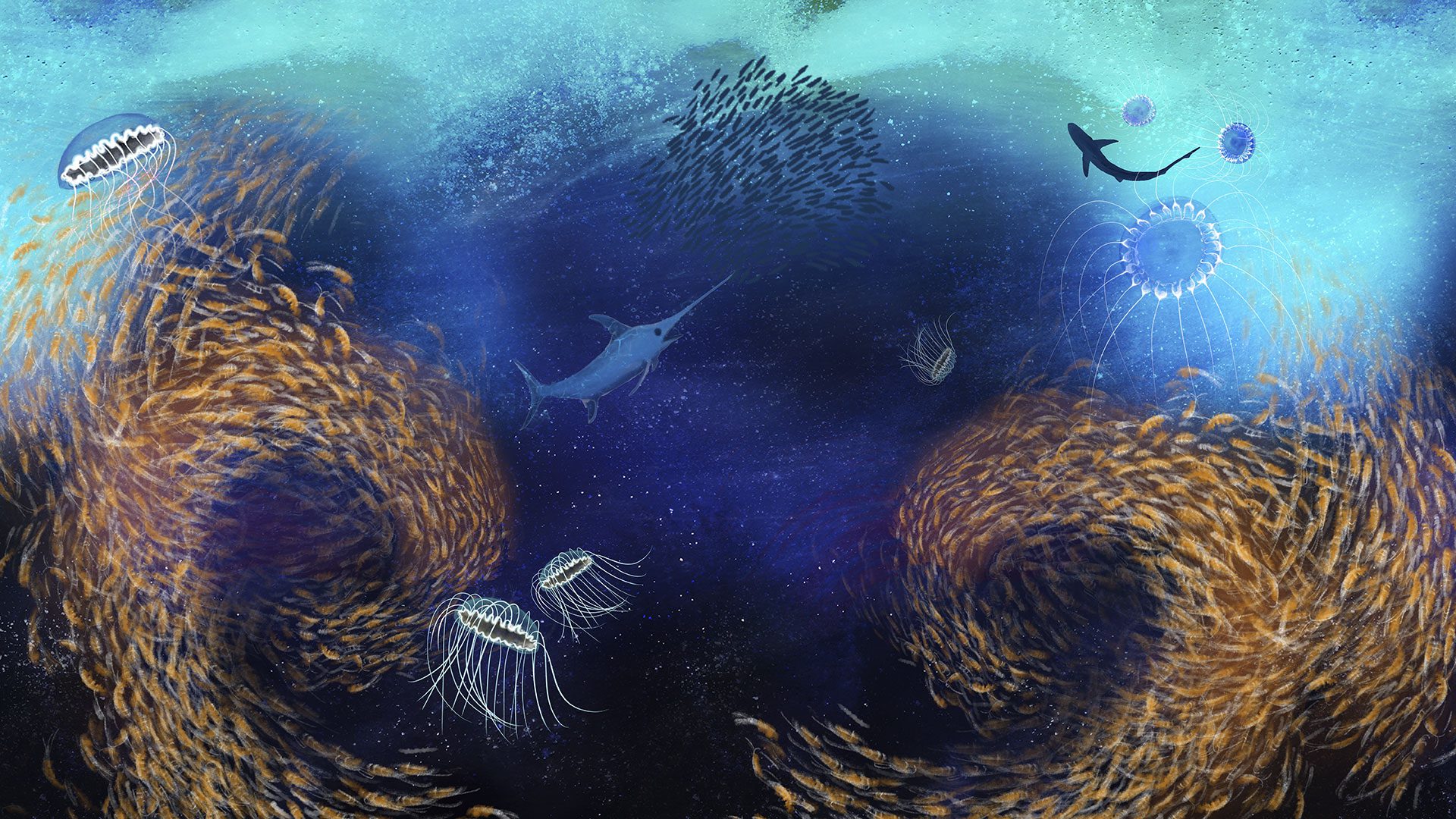

About 650 feet (200 meters) beneath the ocean’s surface, where the last rays of sunlight start to disappear, lies the mysterious realm of the ocean twilight zone. It’s home to some of the most abundant animal life on Earth, and it could play a major role in global climate—yet due to its vast size and remote nature, it’s been woefully understudied.

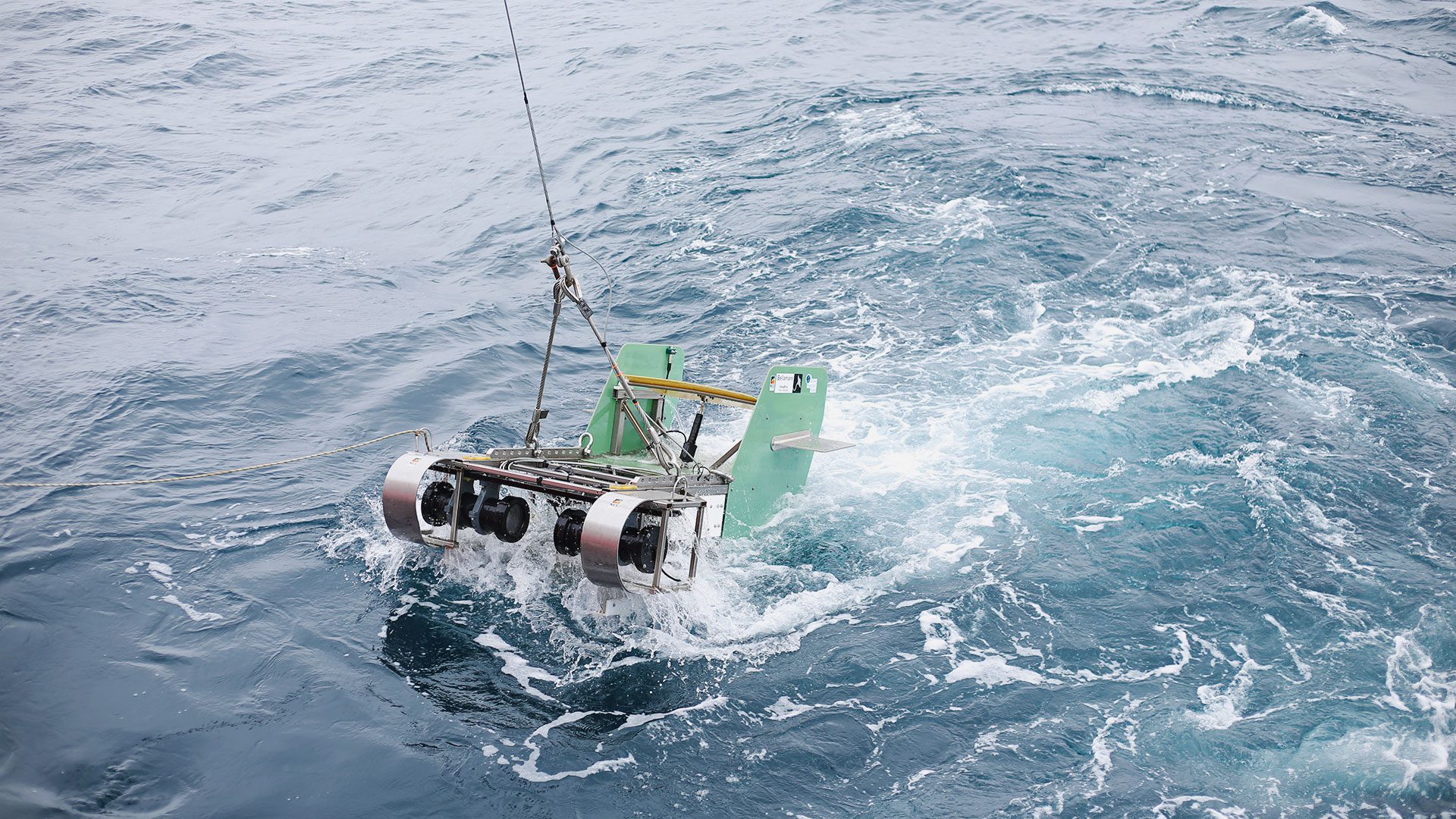

Over the past few years, however, new technologies have made this region more accessible than ever before, helping scientists learn more about its inner workings. Cutting-edge autonomous vehicles, animal tags, cameras, and acoustic systems can now collect massive amounts of information from the zone’s deep waters in a short period of time, cranking out multiple terabytes of data in a single deployment. The relative ease of gathering this much information is a boon for scientists trying to understand the twilight zone, but it poses a complex challenge: how do we extract meaningful science from such sprawling datasets?

Some researchers at WHOI are adopting powerful new tools that might help. Using a specific flavor of artificial intelligence called deep learning, which is loosely inspired by the way the human brain works, they’ve created computer software that can analyze staggering amounts of data and highlight subtle patterns that would take human researchers decades to find.

Stingray is towed behind a research vessel to capture images and acoustic information in the twilight zone. (Photo by Marley Parker, © Woods Hole Oceanographic Institution)

Biologist Heidi Sosik uses these AI techniques extensively in her work. As the lead scientist for WHOI’s Ocean Twilight Zone project, she studies plankton, the tiny organisms at the base of the zone’s food webs, and is revealing how they fit into the twilight zone’s ecosystem. It’s a complex question: in addition to providing food for larger animals, plankton also play a part in moving carbon from the surface into deep water, which ultimately helps to regulate global climate.

To understand more about how these organisms live in the twilight zone—where they go, how much they reproduce, which animals are eating them, and so on—Sosik has to figure out not only how much plankton is present at different depths throughout the day, but also which species are present. She’s developed two new technologies that work in concert to achieve those goals. The first, a high-speed towed camera system, can record 15 frames per second, capturing millions of images of plankton, small fish, and gelatinous animals that pass in front of its lens. The second, a specially designed neural network algorithm, can then sort through those images, flagging individual organisms and grouping them by species or genus.

“The AI is used mostly to find targets in images, and to classify what kind of organism it may be. In some cases, we can even use it to tell us whether those organisms are alive or dead or being eaten by something else if that’s evident in the image,” Sosik says.

It’s a vast improvement over the way that plankton biologists had to work just a generation ago, Sosik adds: “Before high-volume imaging and AI, the best we could do was to pull a discrete water sample from the ocean and have a human look at it through a microscope. This technology gives us many orders of magnitude more information, while doing it faster than any human could.”

While the technique sounds almost like magic, creating AI software that can achieve these goals isn’t trivial. Much of the work comes in the preparation, rather than the software itself: first, scientists have to create an annotated dataset—effectively a huge trove of reference material that shows each known species from multiple angles and in multiple settings—and use those examples to “train” the AI in advance. Using that information, the software’s algorithm can generate a complex set of rules to identify each organism in the wild. It’s a bit like studying photos of a stranger before trying to spot them in a busy restaurant.
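The two phases described above, learning from annotated examples and then labeling new ones, can be shown with a deliberately tiny stand-in written in Python. This is not the neural network Sosik's team uses: it swaps in a much simpler nearest-centroid classifier, and the training examples, the two features (body length in millimeters and an elongation ratio), and the labels are all invented for illustration.

```python
from math import dist
from statistics import mean

# Hypothetical annotated training set: (features, label).
# Features are invented for illustration: (length_mm, elongation_ratio).
TRAINING = [
    ((1.2, 3.0), "copepod"),
    ((1.5, 3.4), "copepod"),
    ((20.0, 1.1), "jelly"),
    ((25.0, 1.3), "jelly"),
    ((30.0, 8.0), "small fish"),
    ((35.0, 7.5), "small fish"),
]

def train(examples):
    """'Training' here just learns one centroid (mean feature vector) per label."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }

def classify(model, features):
    """Assign the label whose centroid is closest in feature space."""
    return min(model, key=lambda label: dist(model[label], features))

model = train(TRAINING)
print(classify(model, (1.3, 3.1)))   # copepod
print(classify(model, (33.0, 7.9)))  # small fish
```

A neural network learns its own features directly from image pixels rather than relying on two hand-picked measurements, and it needs far more annotated examples, which is why assembling the training set is where so much of the effort goes.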

Thanks to AI’s ability to process huge numbers of images, Sosik is getting a window into all sorts of large-scale phenomena: how plankton vary over large distances; how the distribution of a particular species or genus changes in depth and in horizontal distances; and how the behavior of individual species may differ. “If we suspect a certain species is a vertical migrator that comes to the surface at night and goes back down into deep water during the day, we can actually quantify that with this approach,” she says. “It’s really a game changer for our field.”
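As a rough illustration of what quantifying a vertical migration could mean, the Python sketch below compares one species' average depth by day and by night. The detections, the fixed 6 a.m. to 6 p.m. daytime window, and the function names are all hypothetical; a real analysis would start from classified images tagged with depth and time, and would use actual local sunrise and sunset.

```python
from statistics import mean

# Hypothetical detections of one classified species: (hour_of_day, depth_m).
DETECTIONS = [
    (2, 60), (3, 75), (22, 50), (23, 65),        # nighttime sightings
    (10, 420), (12, 450), (14, 430), (15, 400),  # daytime sightings
]

def is_night(hour, sunrise=6, sunset=18):
    """Crude day/night split using fixed hours (an assumption)."""
    return hour < sunrise or hour >= sunset

def migration_amplitude(detections):
    """Mean daytime depth minus mean nighttime depth, in meters.

    A large positive value is consistent with a species that rises
    toward the surface at night and sinks during the day.
    """
    night = [depth for hour, depth in detections if is_night(hour)]
    day = [depth for hour, depth in detections if not is_night(hour)]
    return mean(day) - mean(night)

print(migration_amplitude(DETECTIONS))  # 362.5
```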

A number of Sosik’s colleagues working on the Ocean Twilight Zone project are also adopting AI to turbocharge their research. Andone Lavery, an acoustical oceanographer at WHOI, is taking an approach similar to Sosik’s. Instead of images, however, she’s using AI methods to find patterns in acoustic data.

Ocean acoustician Andone Lavery points to the deep scattering layer seen on computers aboard R/V Neil Armstrong—a dense population of creatures that migrate from the Ocean Twilight Zone to feed at the surface every night. (Photo by Daniel Hentz, © Woods Hole Oceanographic Institution)

Lavery specializes in using sonar and other acoustic systems to “see” into the deep ocean. Sound, she notes, is an incredibly useful tool for spotting organisms at a distance, and can provide a broad snapshot of the diversity of life inside large volumes of water. To do this, Lavery sends out a pulse of sound that sweeps through many different frequencies, then listens for its echo in the water. Based on the properties of that echo, it’s possible to learn not only an organism’s location, but a bit about the physical properties of its body as well (large fish, for instance, will scatter certain specific frequencies, whereas a swarm of krill will scatter others).
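The location half of this idea can be sketched in a few lines of Python. The example assumes a linear frequency sweep (a "chirp"), a round sound speed of 1500 m/s, and a made-up sampling rate, frequency band, noise level, and target distance; it is not Lavery's processing chain. It finds when the echo returns by sliding a copy of the outgoing pulse along the recording (a matched filter) and converts that two-way travel time into a distance. Inferring body properties from how strongly a target scatters each frequency would be a further step not shown here.

```python
import math
import random

SOUND_SPEED = 1500.0  # m/s, a typical round figure for seawater
FS = 100_000          # samples per second (hypothetical)

def chirp(f0, f1, duration, fs=FS):
    """Linear sweep from f0 to f1 Hz lasting `duration` seconds."""
    n = round(duration * fs)
    k = (f1 - f0) / duration  # sweep rate in Hz per second
    return [math.sin(2 * math.pi * (f0 * t + 0.5 * k * t * t))
            for t in (i / fs for i in range(n))]

def echo_range(pulse, received, fs=FS, c=SOUND_SPEED):
    """Matched filter: slide the pulse along the recording, keep the lag
    with the largest cross-correlation, then convert that lag to range."""
    best_lag, best_score = 0, -math.inf
    for lag in range(len(received) - len(pulse) + 1):
        score = sum(p * received[lag + i] for i, p in enumerate(pulse))
        if score > best_score:
            best_lag, best_score = lag, score
    two_way_time = best_lag / fs
    return c * two_way_time / 2  # sound travels to the target and back

# Simulate a faint echo from a hypothetical target 30 m away, plus noise.
pulse = chirp(10_000, 40_000, 0.001)
delay = round(2 * 30.0 / SOUND_SPEED * FS)
rng = random.Random(0)
received = [rng.gauss(0.0, 0.02) for _ in range(delay + len(pulse) + 500)]
for i, p in enumerate(pulse):
    received[delay + i] += 0.2 * p

estimate = echo_range(pulse, received)
print(f"{estimate:.1f} m")  # 30.0 m
```

Sweeping across a band of frequencies, rather than pinging at a single tone, gives the echo a distinctive shape that the matched filter can pick out of noise, and the same broadband echo carries the frequency-by-frequency information used to tell animals apart.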

At the moment, Lavery says, the information that acoustic signals can provide about the types of animals in the twilight zone is pretty sparse—at best, she’s able to tell the rough size and type of animal (fish, invertebrate, etc.) but can’t get much more specific. Even with those limits, however, Lavery’s AI techniques will still provide data that give scientists a much deeper understanding of the twilight zone.

“If we can use AI to find subtle patterns in our acoustic data, we may be able to connect those patterns to a specific genus or species in more detail,” she says. Like Sosik’s work, however, the major challenge for Lavery is in training a deep learning network to provide those answers. At the moment, there isn’t much information on the acoustic difference between a swordfish and a tuna, for instance, or between krill and other invertebrates.

“This is where I keep coming back to my biology colleagues,” says Lavery. “Based on the acoustic data, if I tell them that I’m seeing a fish of a certain size that has a swim bladder, would they be able to tell me what kind of fish it is, or how much biomass is associated with it?” she asks. With that information, Lavery adds, it may be possible to construct a broad overview of what lives in the twilight zone using only acoustic signals.

While one source of data alone won’t reveal the mysteries of the ocean twilight zone, the combined efforts of multiple scientists, each processing data through powerful AI tools, will lead to a much better picture of how the zone’s biological processes actually work—and how they in turn affect the rest of the planet, Sosik notes.

“There are so many ways that AI is enabling our understanding of the twilight zone,” she says. “Ultimately, that understanding is going to help humans make better decisions about how to protect those organisms, and how to be better stewards of the natural world.”