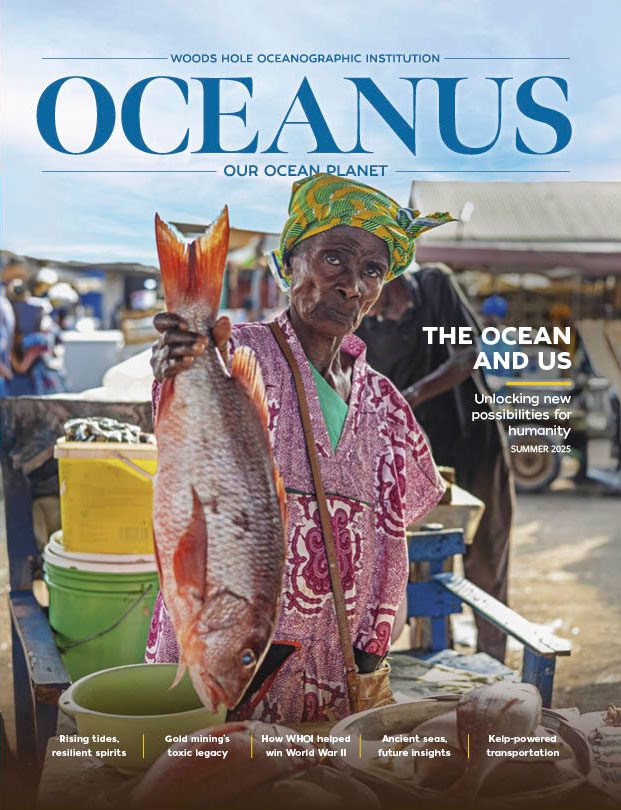

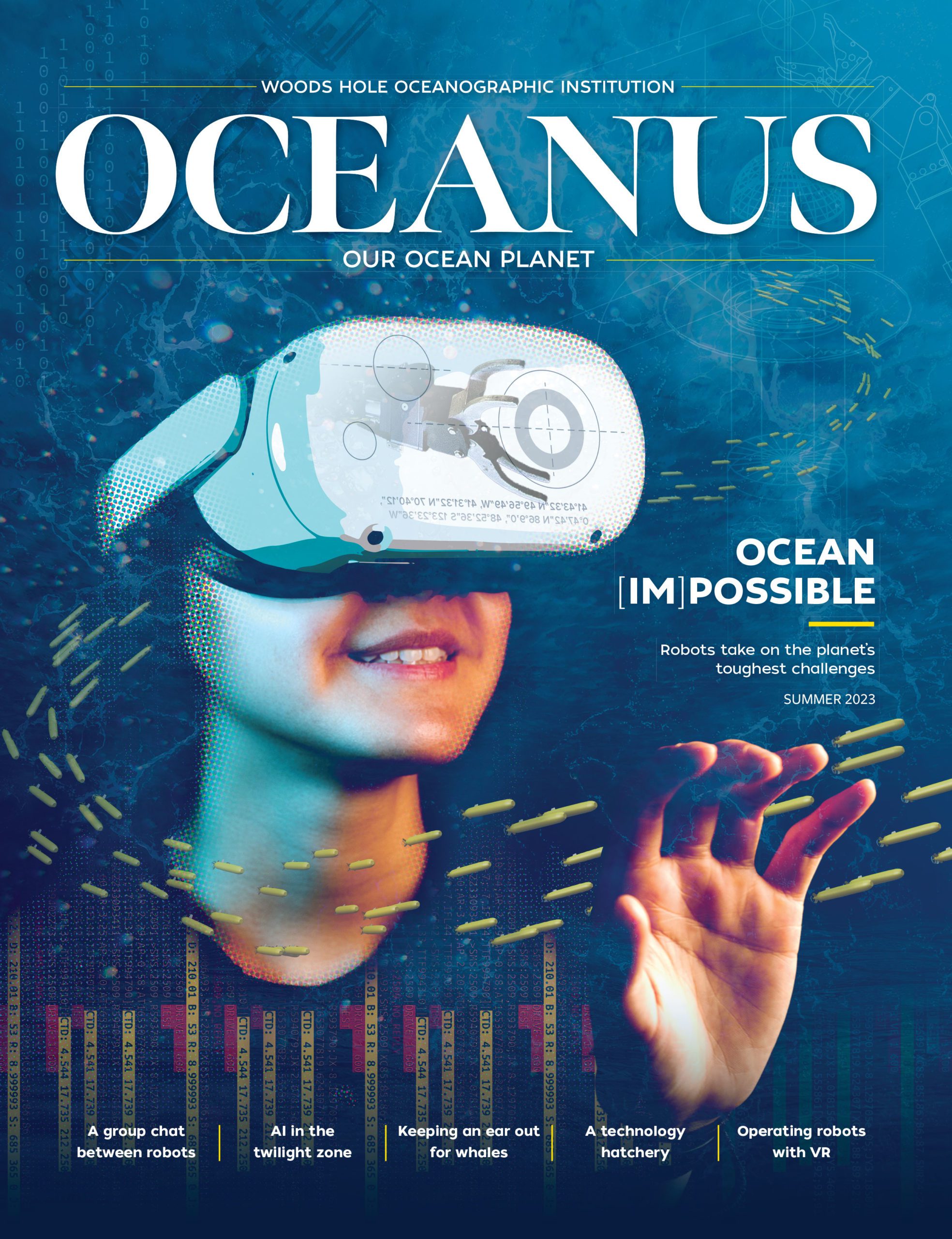

This article printed in Oceanus Summer 2023

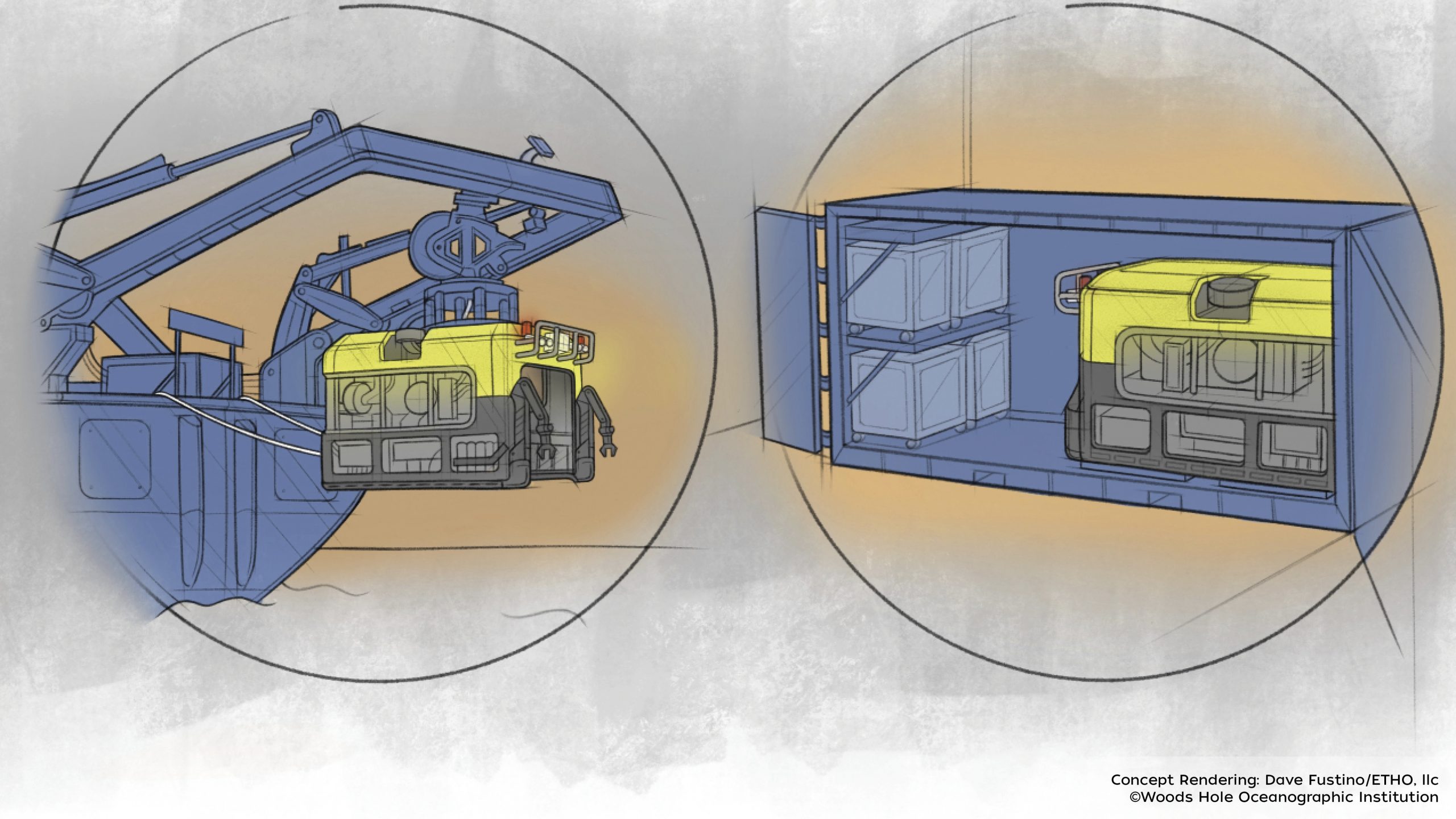

From her apartment in Cambridge, Mass., Amy Phung can see the future. Armed with two controllers, her face obscured by a virtual reality (VR) headset, she moves as deliberately as a Tai Chi master. As part of a NOAA Ocean Exploration research cruise, she’s controlling the hybrid remotely operated vehicle (HROV) Nereid Under Ice (NUI) as it takes a push-core sample from the seafloor over 3,000 miles away.

For Phung, virtual reality and robotics are a natural pairing that will accelerate exploration and facilitate human connection, opening doors to profound new ways of problem-solving. While still an undergraduate at Olin College of Engineering, she helped design a virtual reality control room for remotely operated vehicle (ROV) pilots at the Monterey Bay Aquarium Research Institute. In 2020, she came to WHOI as a summer student fellow to work on robotic manipulator arm calibration with scientist Richard Camilli. Now enrolled in the MIT-WHOI Joint Program, Phung is focusing her graduate work on automating the use of those robotic arms. She envisions a day when scientists will be able to ask a robot a question and it will find the samples it needs to answer that question all on its own.

“There’s a lot we don’t know about the ocean, and a remarkable percentage of it remains unmapped and unexplored,” Phung points out. “Robots have lots of potential for helping us automate some of that exploration, which will provide us with better insights into ocean processes and our impacts on them. Robots that can help identify where we need to intervene will help us make decisions about how to protect the environment.”

Automation can not only rapidly scale up ocean exploration, Phung says, but also increase access to the sea. Scientists who lack the time, ability, or funding to accompany ROVs on a ship could simply put on VR headsets to interact with the vehicles from land, saving time and money while collecting seafloor samples. Phung, who wore a shirt that said “AI for Accessibility” during our interview, envisions a day when even citizen scientists could use virtual reality to explore the ocean.

In a September 2021 test with the hybrid remotely operated vehicle (HROV) Nereid Under Ice off the coast of California, Phung was able to operate the vehicle remotely from her apartment in Cambridge, Massachusetts. For safety reasons, a pilot aboard the E/V Nautilus approved each request before the vehicle arm moved. (Credit: Video and VR visualization courtesy of Amy Phung, © Woods Hole Oceanographic Institution)

“This VR system is a really enabling technology,” she says. “I don’t want to claim too much, but with more careful research, it could help enable oceanographers with impaired vision, hearing, or mobility [to] become more involved with ship-based operations.”

But how could untrained scientists realistically pilot an advanced underwater vehicle like the ROV Jason? Last summer, Phung tested a group of trained ROV pilots and a mixed group of students and scientists to see whether they could pick up a block from a sandbox with a robotic manipulator arm, using VR headsets and topside controllers. Both pilots and novices performed the task faster with the VR interface, while novices had a hard time with the standard piloting controls. Phung then made the test more challenging by reducing the camera feed to a single frame every 10 seconds. Even the experienced pilots struggled with the lack of visual information, but with the VR assist, they were able to perform the tasks without errors or wasted time.

Phung’s tests suggest that VR could be helpful in low-bandwidth situations and could also be applied to controlling untethered vehicles, thus increasing their range. But an even more pressing need is to perform tasks in low-visibility areas. One complaint Phung hears from ROV pilots is that when they disturb seafloor sediment, they have to wait up to several minutes for the cloud to dissipate before moving again. In future studies, Phung hopes to use acoustics to supplement or even replace the visual data coming in from ROV cameras. Not only would that reduce the power load required to explore the dark depths, but it would also enable robots to differentiate between rocks and sand, even in pitch-black conditions.

Phung has been testing out the concept of automating ocean robot manipulator arms, like the one shown here, with the use of VR headsets. (Photo by Daniel Hentz, © Woods Hole Oceanographic Institution)

One day in the not-too-distant future, Phung envisions a fleet of “autonomous intervention vehicles” touring the world ocean on a mission to answer science questions, and perhaps picking up on trends that humans would miss entirely.

“I think in the next ten years, people won’t have to specify the low-level objectives, like, ‘Okay, take a sample here, take a sample there.’ I think we’re going to move closer to research questions, types of queries like, ‘Okay, I don’t care what rock you sample, I just want to know, is this type of bacteria present in this environment, yes or no?’” says Phung. “They could make their own decisions about what’s interesting to sample, or maybe they just do the measurement right there.”

These capabilities could help scientists target their research and pick up the pace of science, not only on Earth but elsewhere in the solar system.

“If we can get robots to the level where they’re independent enough to make their own decisions without human support, that makes it possible to explore oceans that are not on Earth, like on Europa and Enceladus,” she says.

For now, Phung’s virtual reality vision is bringing us all one step closer to the frontiers of exploration on our own ocean planet.

Phung’s research is supported by the National Science Foundation Graduate Research Fellowship and the Link Foundation Ocean Engineering & Instrumentation Ph.D. Fellowship. This particular project was supported by NSF NRI Grants IIS-1830500 and IIS-1830660, and NASA PSTAR Grant NNX16AL0G.